Sync tables from Snowflake to PostgreSQL

Glenn Gillen

VP of Product, GTM

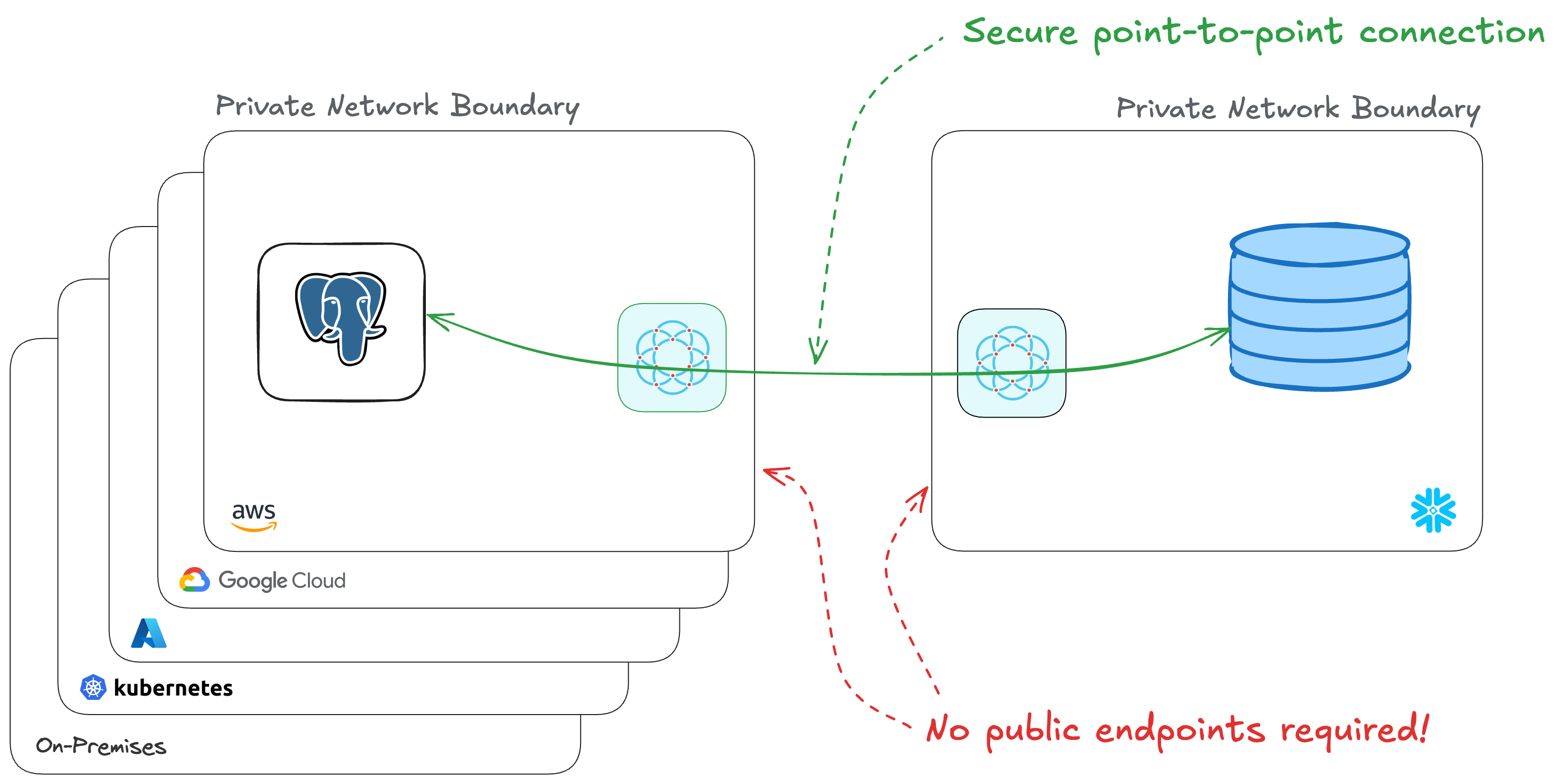

Snowflake is an amazing place to store data from across your entire organization so that you have a single place to aggregate it, query it, and find new insights. But what happens when you want your apps to act on those aggregates and insights? You need a way to stream the changes in real time back out to your transactional database.

Introducing the Snowflake Push to PostgreSQL Connector!

Quick links

- Push to PostgreSQL Connector in the Snowflake Marketplace

- Ockam Node for Amazon RDS PostgreSQL in the AWS Marketplace

Snowflake 💙 PostgreSQL

Snowflake is The Data Cloud and the place to support workloads such as data warehouses, data lakes, data science / ML / AI, and even cybersecurity. This centralization brings a huge amount of convenience through breaking down data silos and allowing teams to make smart data-informed decisions.

After enriching the data and finding new insights, those insights need to make their way back out to the other apps and business systems that can act upon them. PostgreSQL is a powerful, open-source relational database system that's widely used for storing and managing structured data. However, connecting to your PostgreSQL database can be problematic depending on your network topology. It would be convenient to give the database a public address, but that's a significant increase in risk for a system that handles a lot of important data. Managing IP allow lists and updating firewall ingress rules improves security but can be cumbersome to maintain. Alternatives like PrivateLink are better, but they too can be cumbersome to set up and require your systems to be on the same public cloud and in the same region.

In this post I'm going to show you how to securely connect Snowflake to your private PostgreSQL database, in just a few minutes. We will:

- Set up a Snowflake Stream to capture changes to a table in Snowflake

- Set up a PostgreSQL database in AWS

- Connect Snowflake to PostgreSQL with a private, mutually authenticated, end-to-end encrypted connection — without needing to expose either system to the public internet!

Snowflake streams

Snowflake streams are a way to capture every change made to a table (that is, every insert, update, and delete) and record it somewhere else. You may hear it called Change Data Capture (CDC) and it's an effective way to respond to changes in the data that you care about.

Create a stream
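
As a minimal sketch of this step, the statements below create a small source table and a stream that tracks it. The database `cdc_demo`, schema `public`, table `customers`, its columns, and the stream name `customers_stream` are all hypothetical names chosen for illustration; substitute your own.

```sql
-- Run in a Snowflake Worksheet.
-- Hypothetical names throughout: cdc_demo, customers, customers_stream.
CREATE DATABASE IF NOT EXISTS cdc_demo;
USE SCHEMA cdc_demo.public;

-- Source table whose changes we want to capture.
CREATE TABLE IF NOT EXISTS customers (
    id    INTEGER,
    name  VARCHAR,
    email VARCHAR
);

-- The stream records every insert, update, and delete made to customers.
CREATE STREAM IF NOT EXISTS customers_stream ON TABLE customers;

-- Peek at pending changes. A plain SELECT does not consume the stream.
SELECT * FROM customers_stream;
```

Each row returned by the stream carries the table's own columns plus metadata columns such as `METADATA$ACTION` and `METADATA$ISUPDATE`. In a standard stream an update shows up as a `DELETE` and `INSERT` pair with `METADATA$ISUPDATE` set to `TRUE`.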

Amazon Relational Database Service (RDS) for PostgreSQL

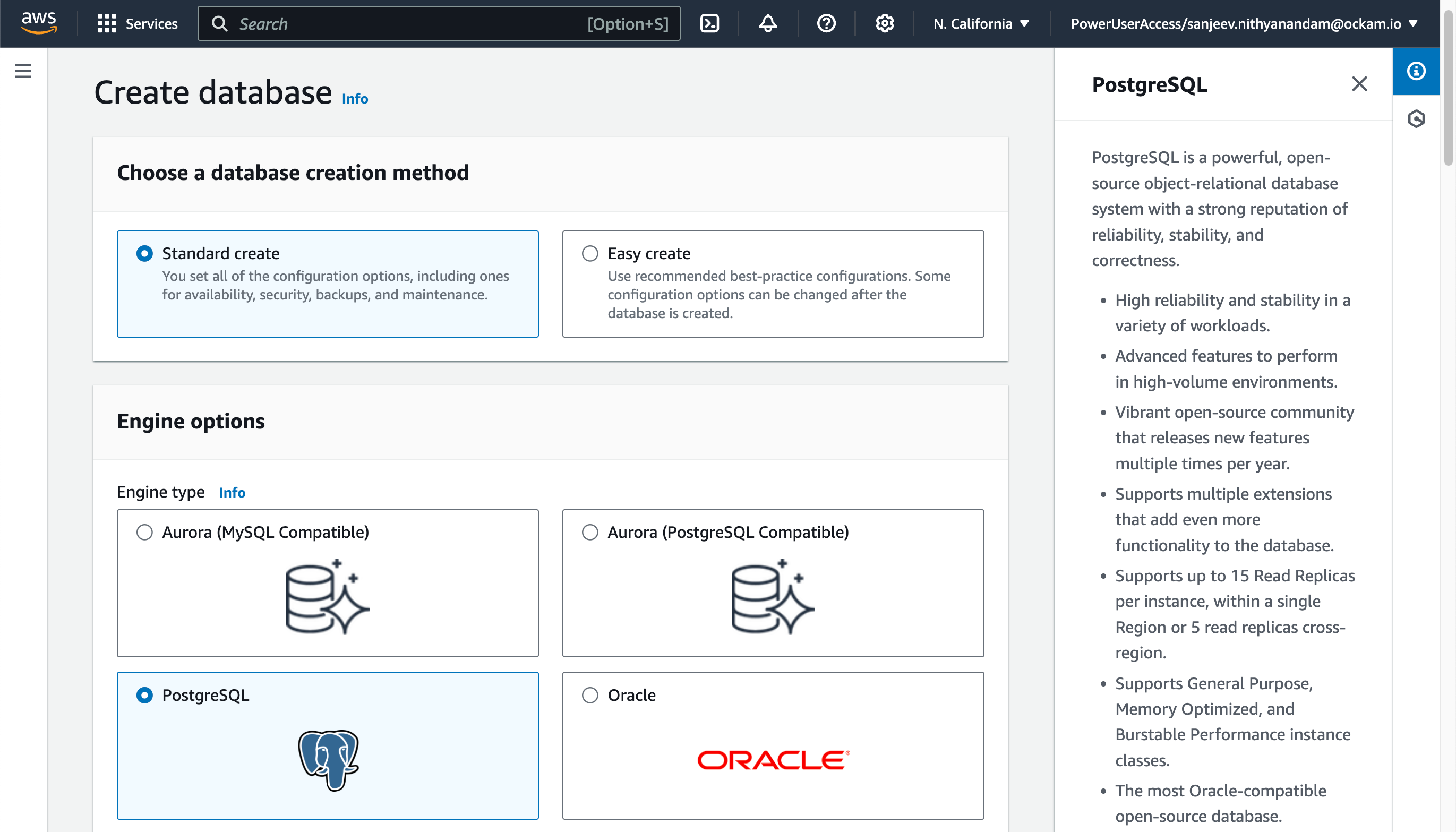

We're going to provision an Amazon RDS PostgreSQL database so we can see the end-to-end experience of data moving from Snowflake to PostgreSQL. If you already have a PostgreSQL database you can use, you can skip this step.

Create a PostgreSQL database

Within your AWS Console search for RDS in the search field at the top and select the matching result. Visit the Databases screen, and then click Create Database.

The Standard Create option provides a good set of defaults for creating an RDS database. Unless you have previous experience that tells you to choose something different, I'd suggest choosing "PostgreSQL", confirming the details, and then clicking Create database at the bottom of the screen.

Once you've started the database creation it may take about 15 minutes for provisioning to complete and for your database to be available.

Create the destination tables
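
A destination table might look like the sketch below, run against PostgreSQL with your usual SQL client. It assumes a hypothetical `customers` source table with `id`, `name`, and `email` columns and simply mirrors that layout. The exact column names and types the connector expects are not spelled out here, so treat the layout as an assumption and adjust it to match your stream.

```sql
-- Run in PostgreSQL.
-- Hypothetical table mirroring an assumed Snowflake source table.
CREATE TABLE IF NOT EXISTS customers (
    id    INTEGER,
    name  VARCHAR(255),
    email VARCHAR(255)
);
```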

Connect Snowflake to PostgreSQL

We've created a stream, PostgreSQL is running, our table is waiting for data. It's now time to connect everything! The next stage is going to complete the picture below, by creating a point-to-point connection between the two systems — without the need to expose any systems to the public internet!

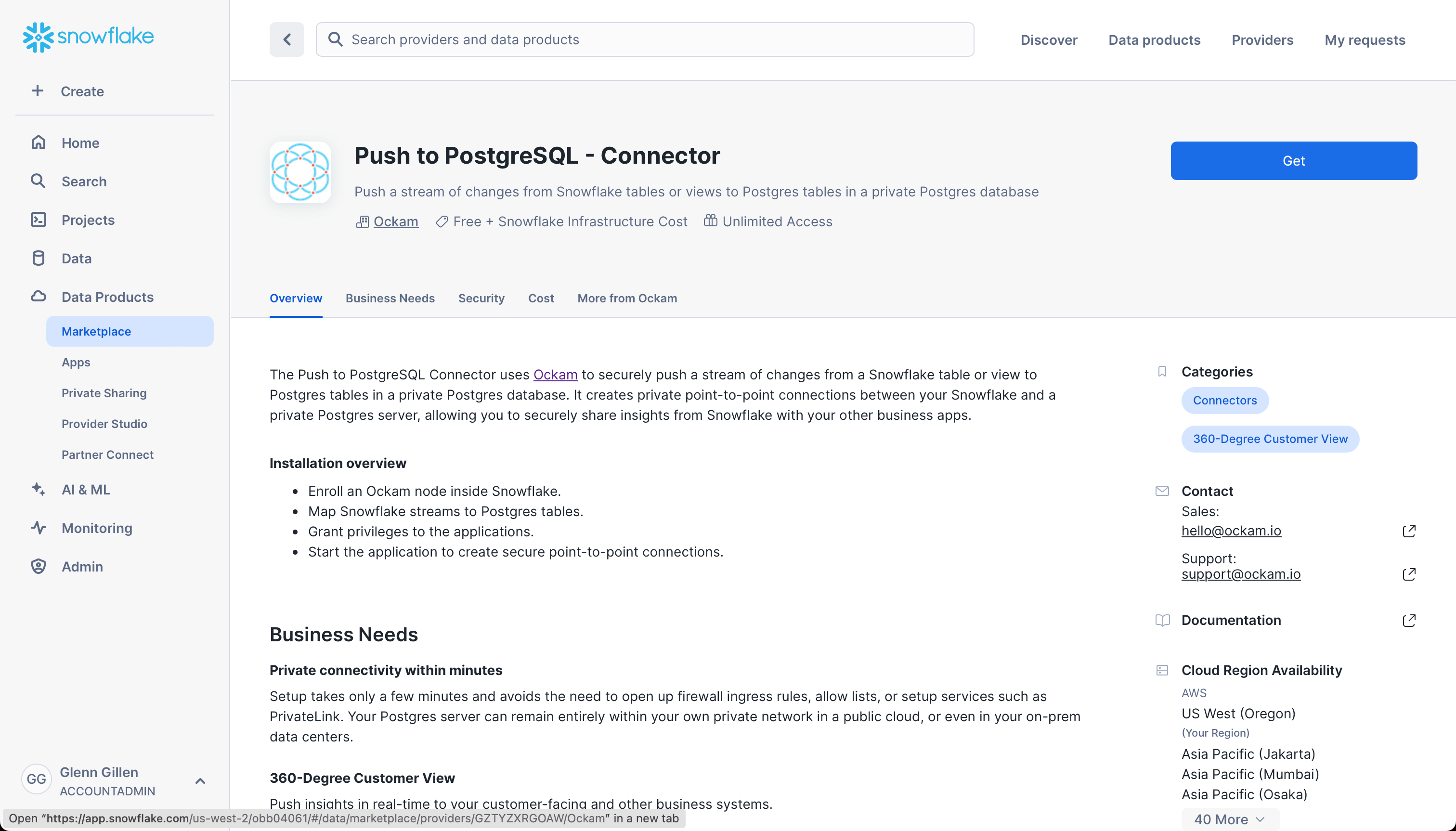

Launch the Snowflake Native App

The Snowflake Push to PostgreSQL Connector by Ockam is available in the Snowflake Marketplace.

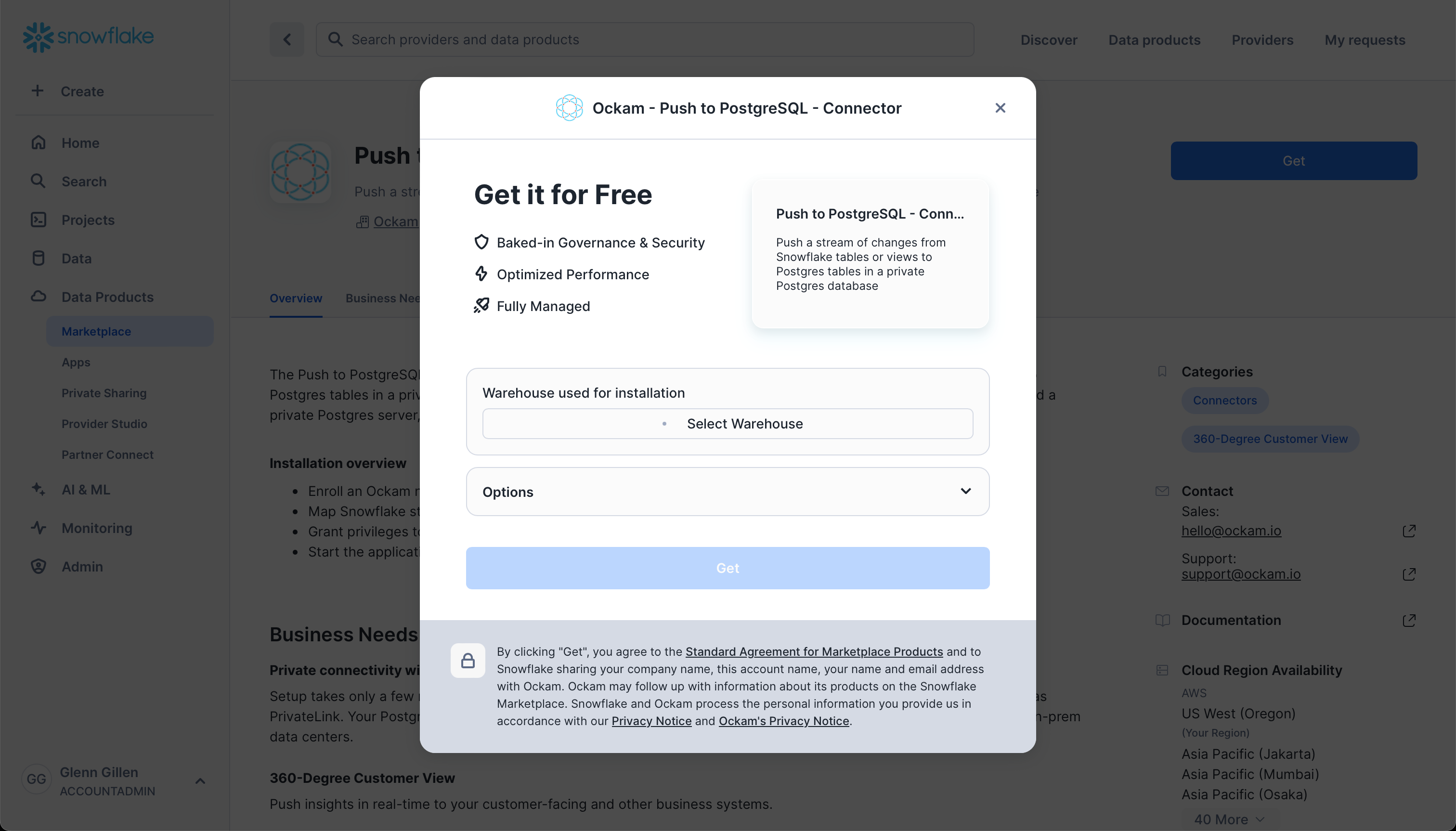

Select a warehouse

The first screen you're presented with will ask you to select the warehouse to utilize to activate the app.

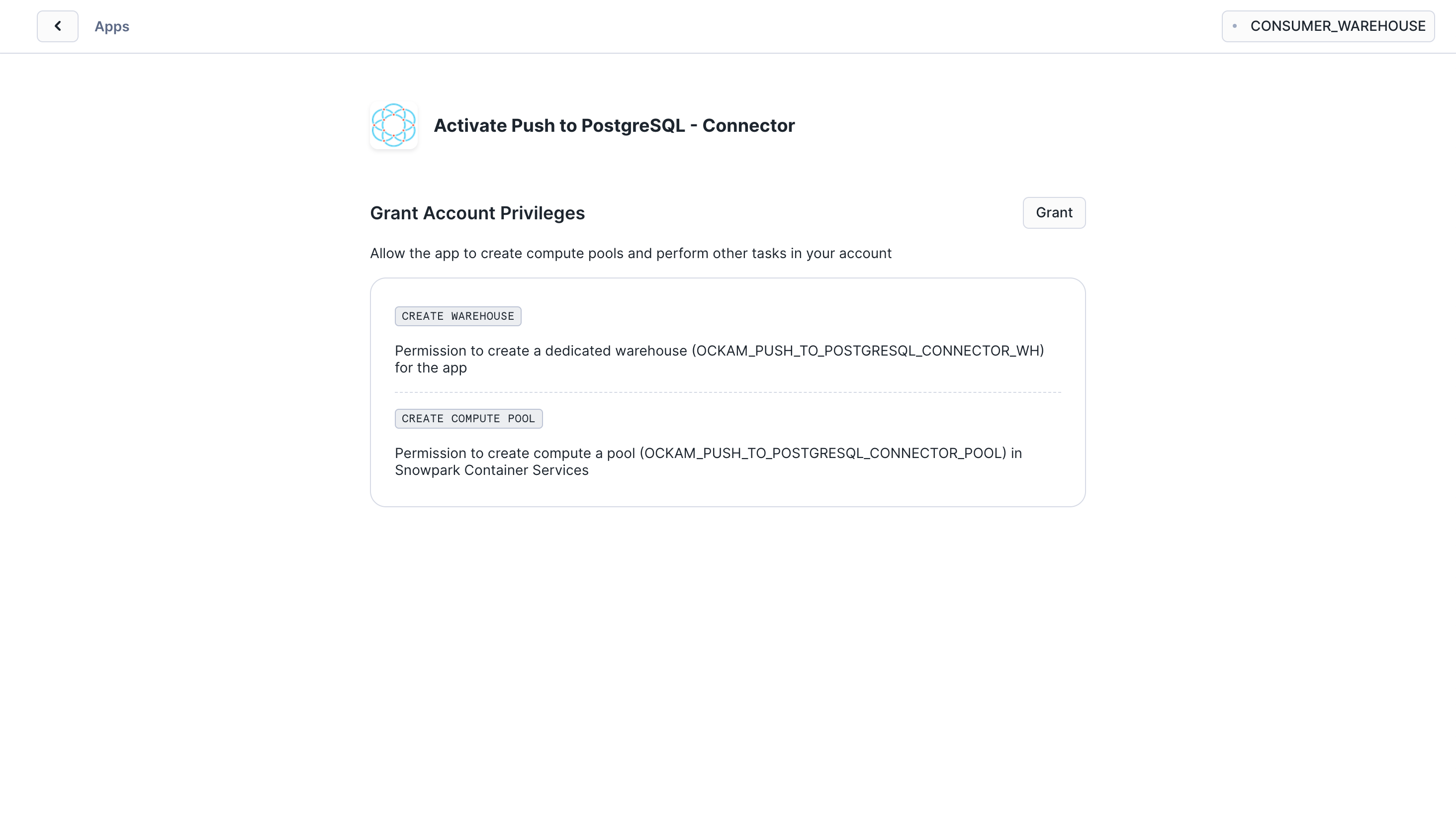

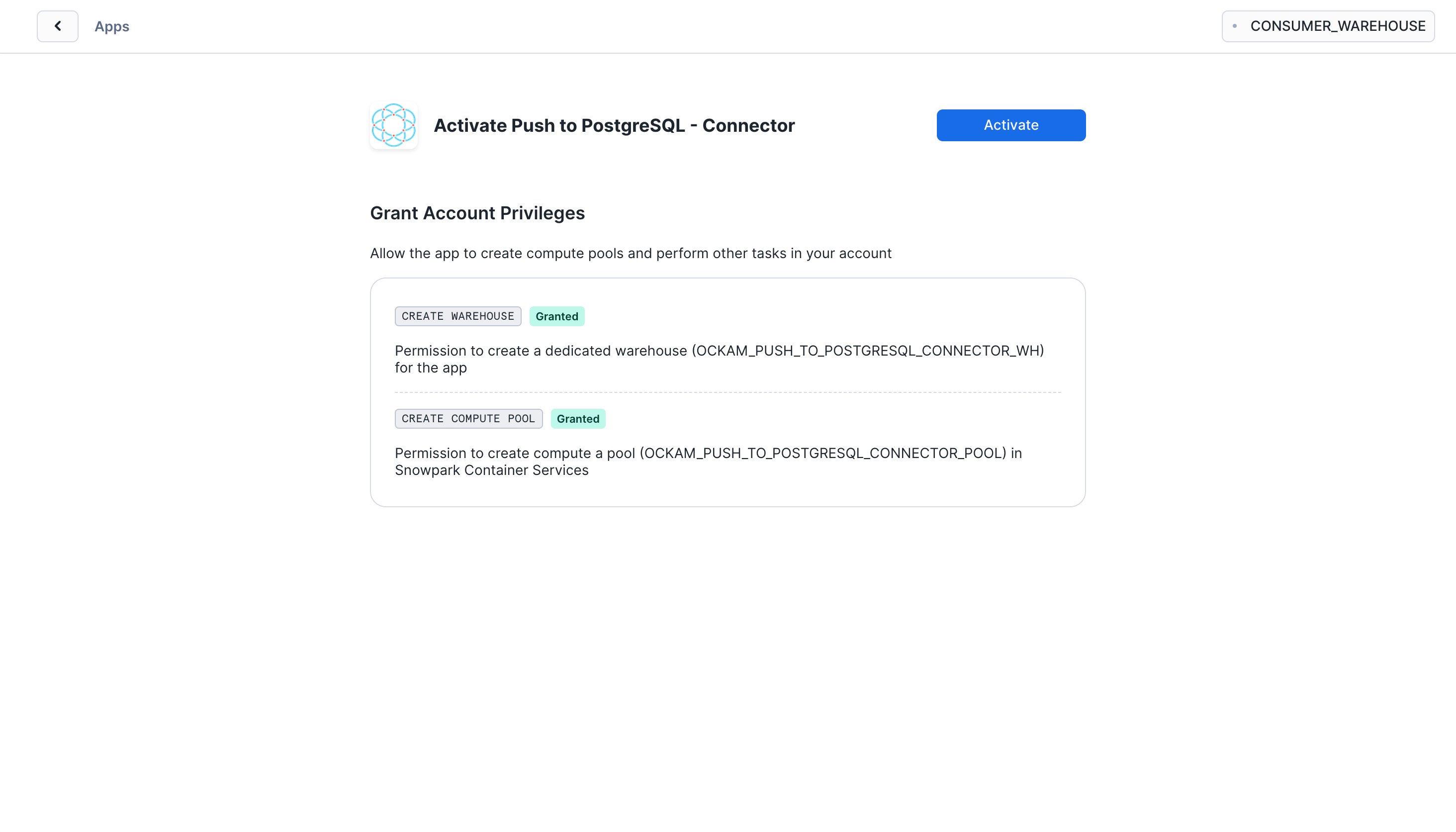

Grant account privileges

Click the Grant button to the right of this screen. The app will then be automatically granted permissions to create a warehouse and create a compute pool.

Activate app

Once the permission grants complete, an Activate button will appear. Click it and the activation process will begin.

Launch app

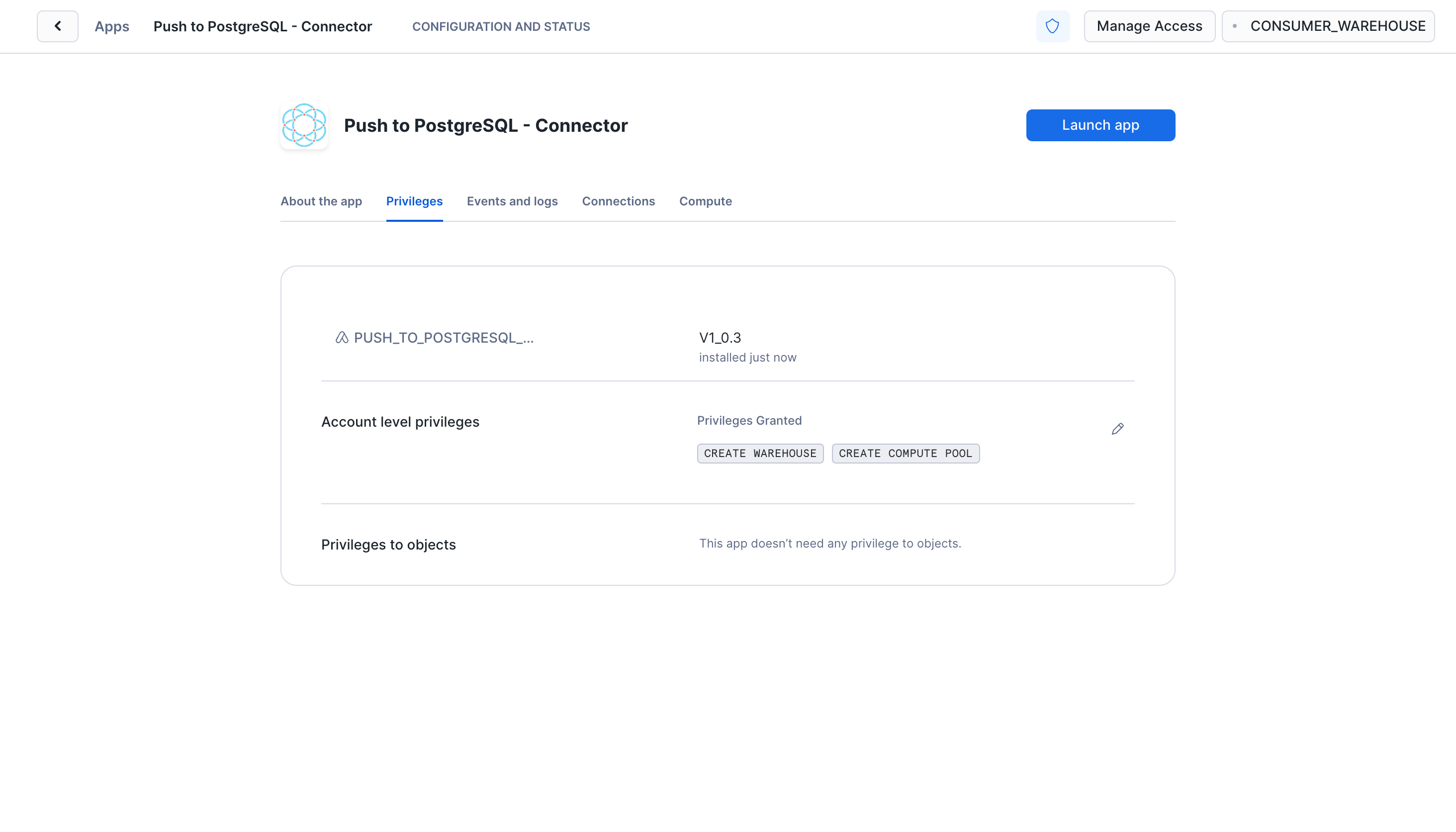

After the app activates you'll see a page that summarizes the privileges that the application now has. There's nothing we need to review or update on these screens yet, so proceed by clicking the Launch app button.

Setup Ockam

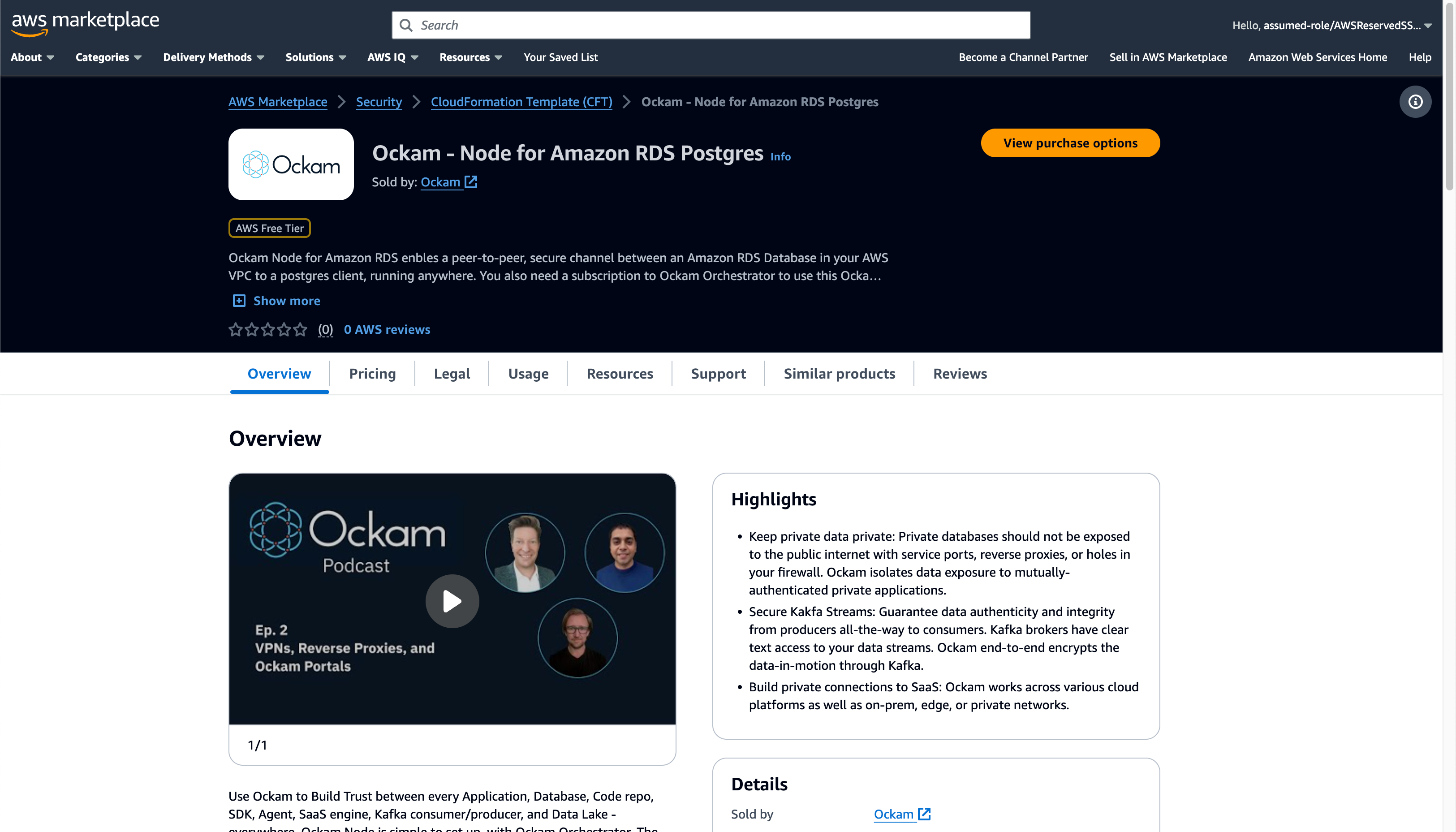

Option 1 - Run an Ockam node next to PostgreSQL via the AWS Marketplace

The Ockam Node for Amazon RDS is a streamlined way to provision a managed Ockam Node within your private AWS VPC. If you'd prefer the ability to deploy your Ockam Node yourself (for example on an existing machine or in a container) jump to our instructions on how to run an Ockam Node manually.

To deploy the node that will allow Snowflake to reach your Amazon RDS PostgreSQL database, visit the Ockam Node for Amazon RDS PostgreSQL listing in the AWS Marketplace, click the Continue to Subscribe button, and then click Continue to Configuration.

On the configuration page choose the region that your Amazon RDS cluster is running in, and then click Continue to Launch followed by Launch.

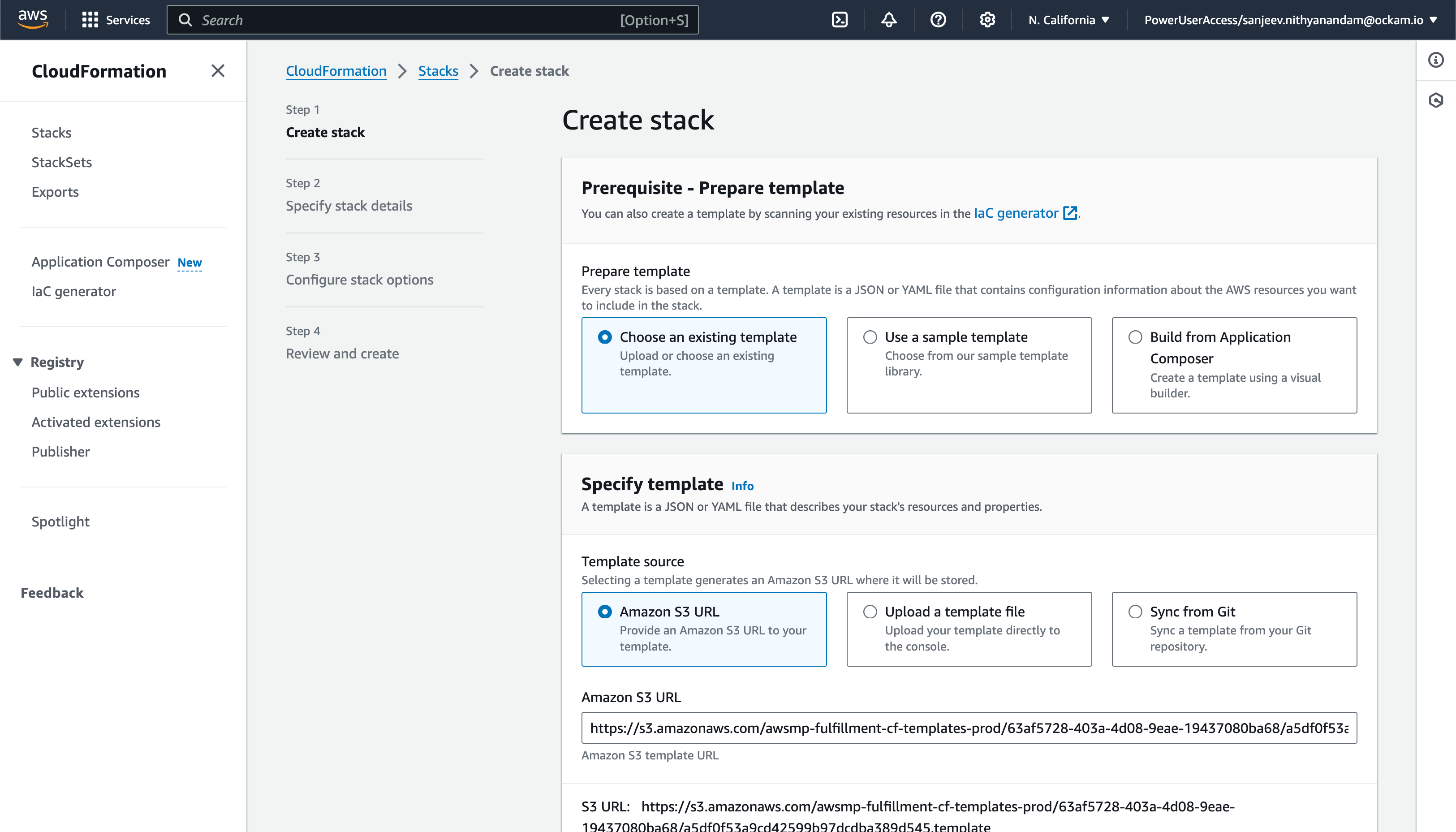

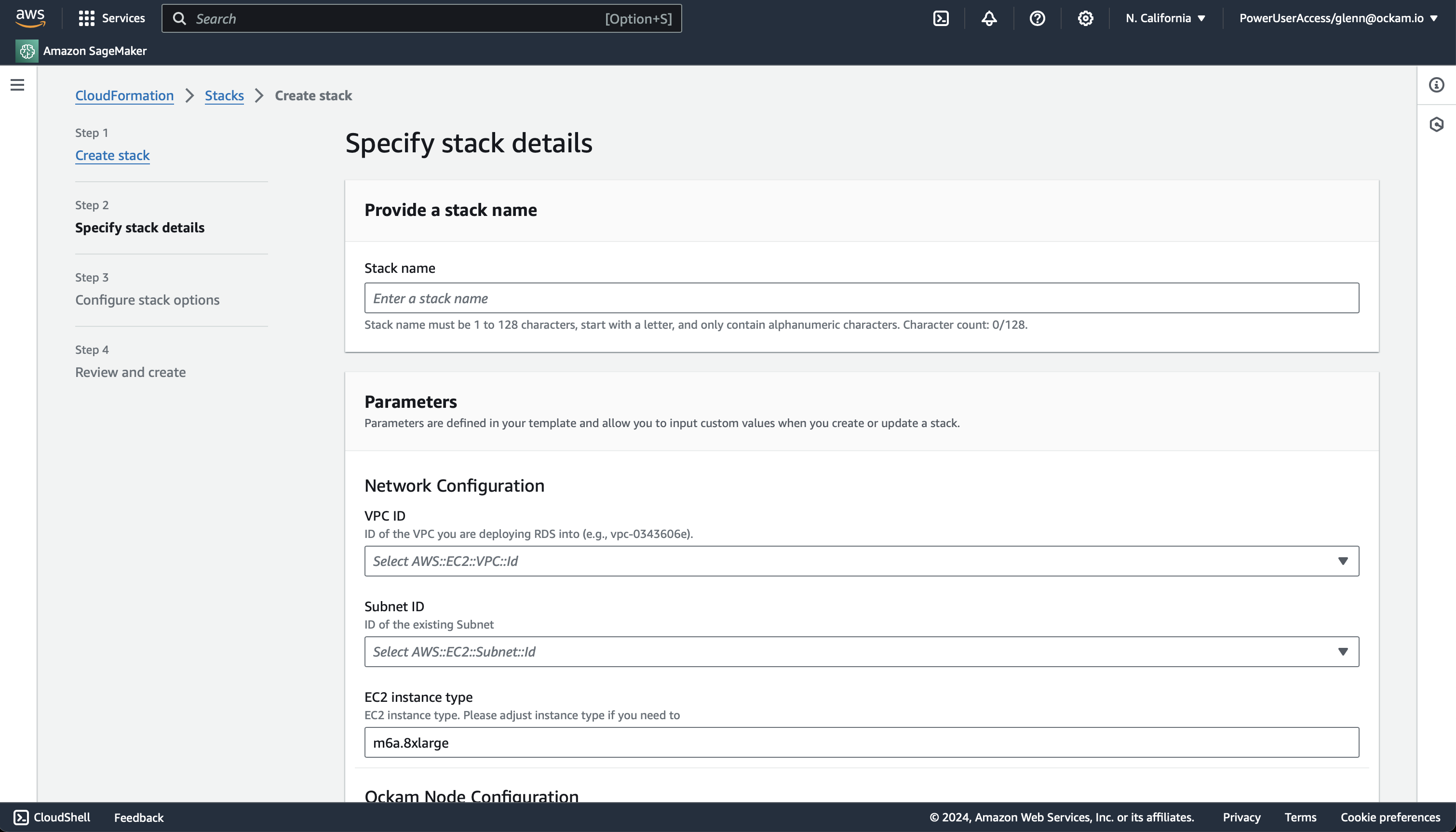

Enter stack details

The initial Create Stack screen pre-fills the fields with the correct information for your node, so you can press Next to proceed.

Enter node configuration

This screen has important details that you need to fill in:

- Stack name: Give this stack a recognisable name. You'll see it in various locations in the AWS Console, and it'll also make it easier to clean these resources up later if you wish to remove them.

- VPC ID: The ID of the Virtual Private Cloud network to deploy the node in. Make sure it's the same VPC where you've deployed your RDS instance.

- Subnet ID: Choose the same subnet where your RDS instance is deployed.

- Enrollment ticket: Copy the contents of the postgres.ticket file we created earlier and paste it in here.

- RDS PostgreSQL Database Endpoint: In the Connectivity & security section for your Amazon RDS Database you will find the Endpoint details. Copy the Endpoint value for the private RDS database that's in the same subnet you chose above.

- JSON Node Configuration: Copy the contents of the postgres.json file we created earlier and paste it in here.

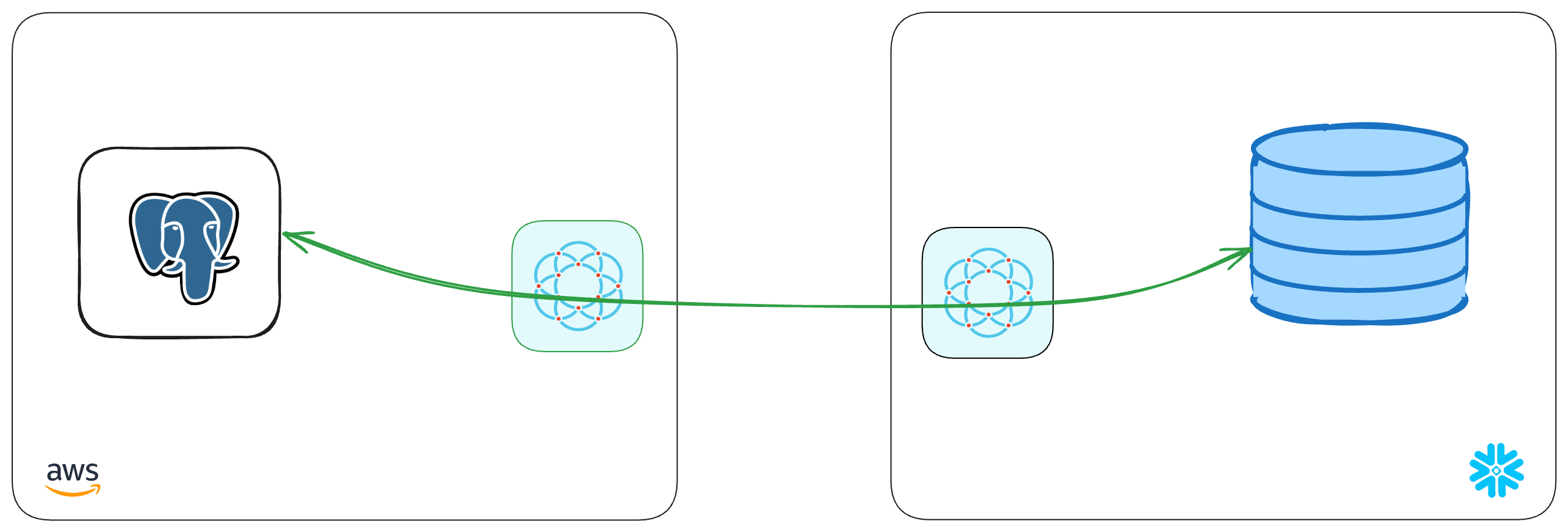

We've now completed the highlighted part of the diagram below, and our Amazon RDS PostgreSQL node is waiting for another node to connect.

Option 2 - Run an Ockam Node next to PostgreSQL manually

Connect the Ockam node in Snowflake

Configure connection details

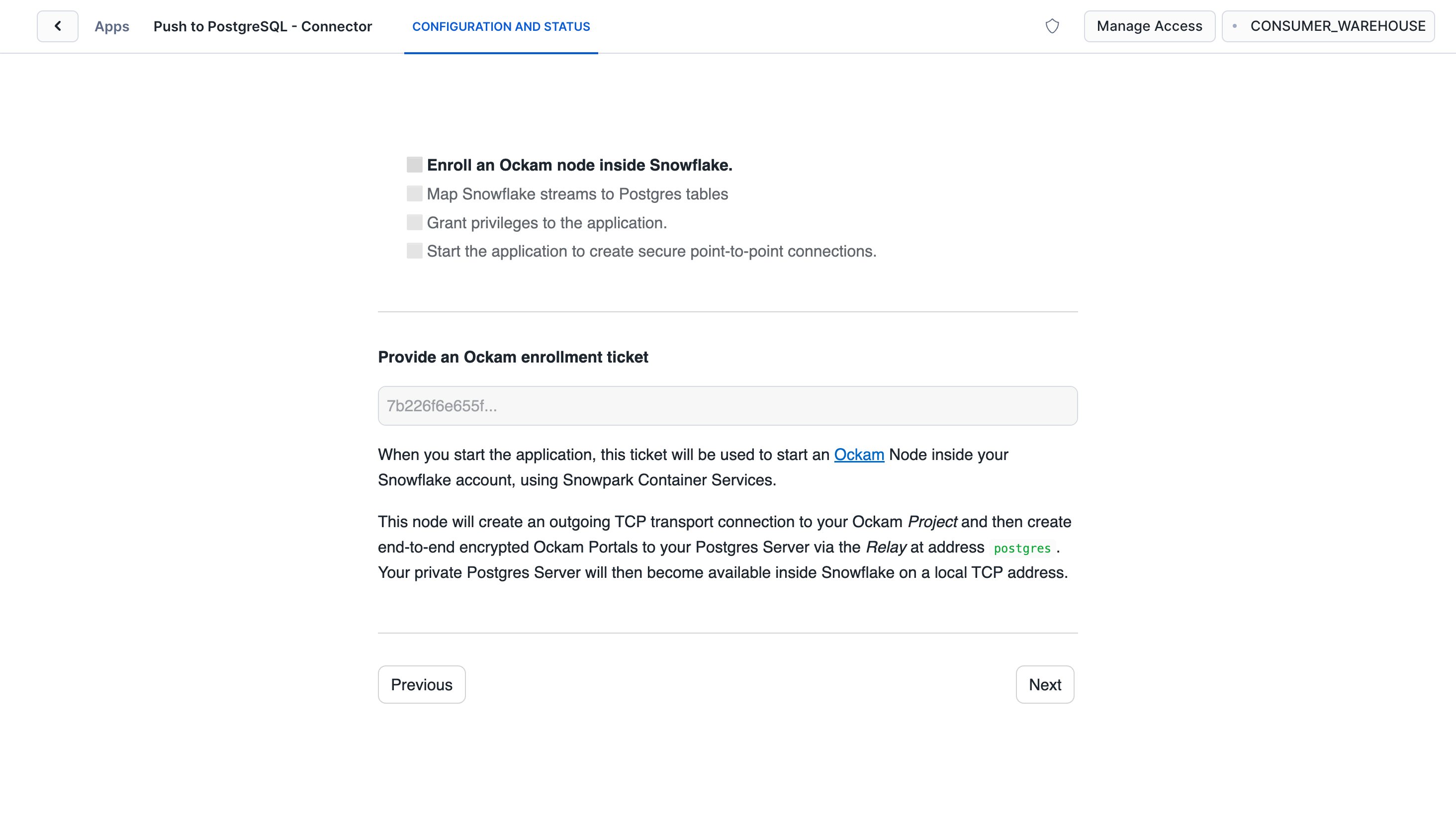

Click "Get started" to open the Snowflake setup screen.

Take the contents of the file snowflake.ticket that we just created and paste it into the "Provide the above Enrollment Ticket" form field in the "Configure app" setup screen in Snowflake.

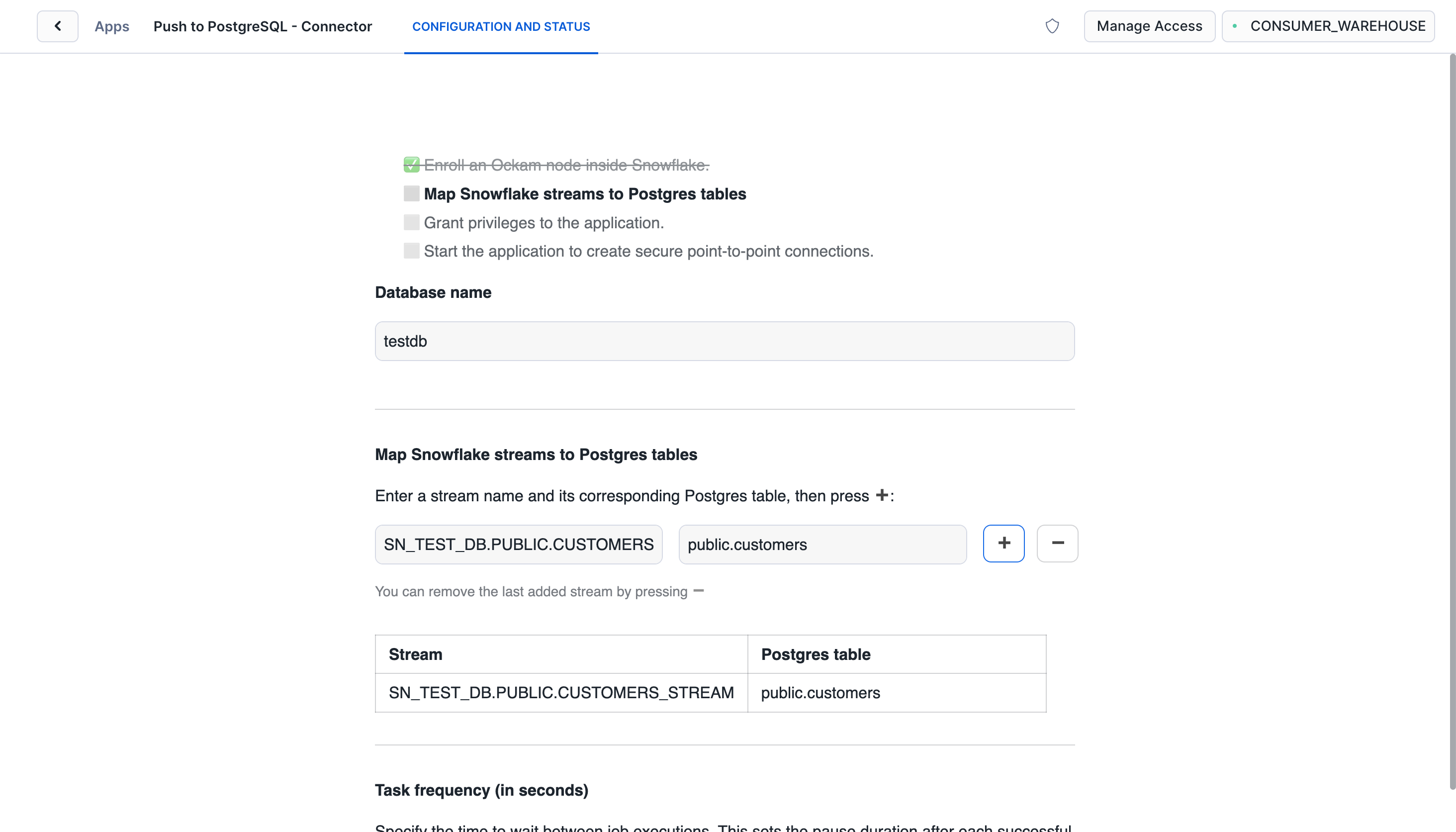

Map Snowflake stream to PostgreSQL table

Snowflake sends each stream of changes to a table in your PostgreSQL database, so we need to define the database and the mapping between each stream and its table. Enter the stream you want to send (in the format database.schema.stream), and then enter the name of the matching table in PostgreSQL.

The table(s) should already exist in the PostgreSQL database! If you've not created the destination tables, go and do that now.
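
If you're unsure of a stream's fully qualified name, Snowflake can list the streams in a schema. This sketch assumes a hypothetical database `cdc_demo` and schema `public`. The `database_name`, `schema_name`, and `name` columns of the output combine into the database.schema.stream value the form asks for.

```sql
-- Run in Snowflake. cdc_demo.public is a hypothetical schema.
SHOW STREAMS IN SCHEMA cdc_demo.public;
```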

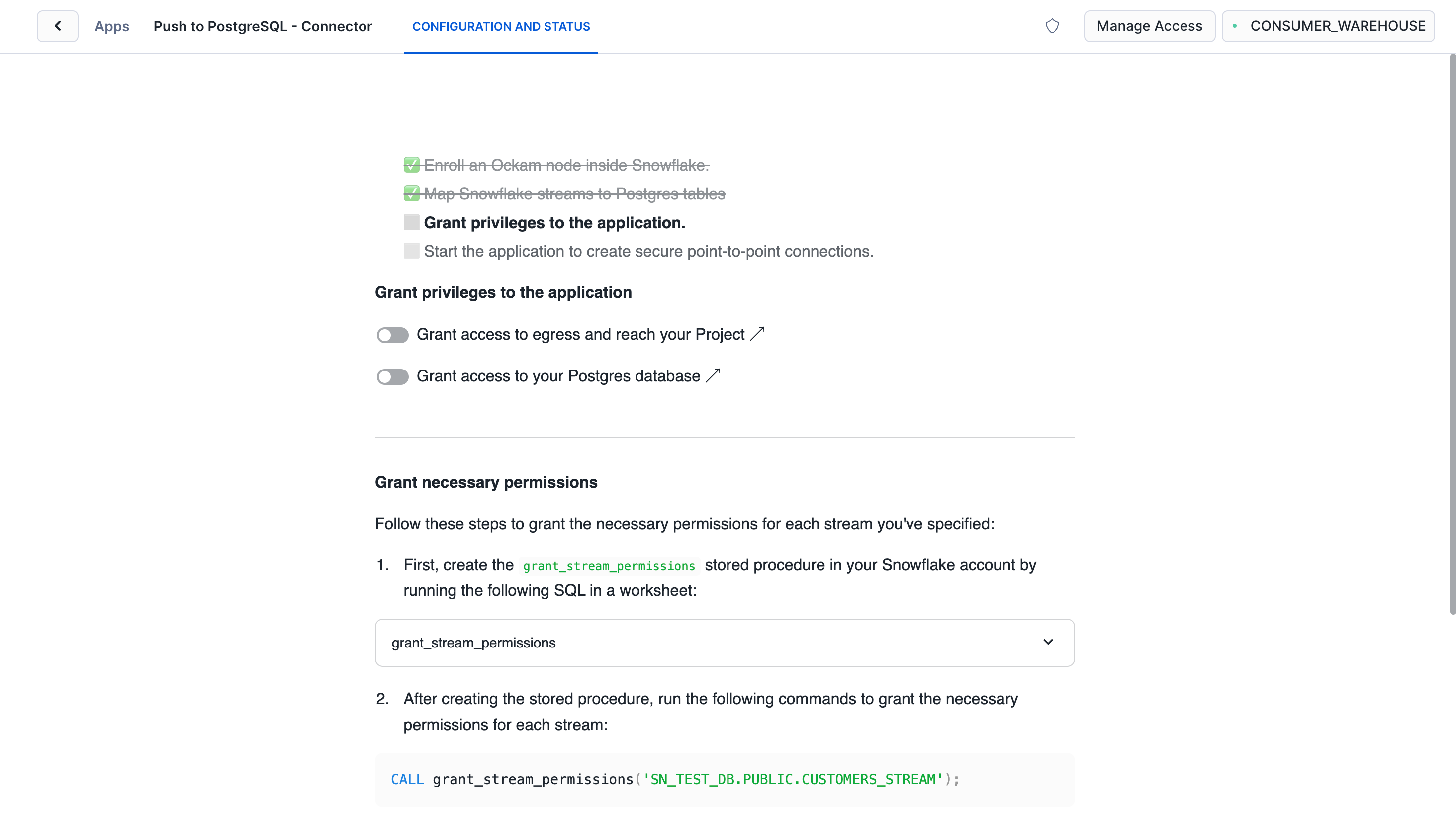

Grant privileges

To be able to authenticate with Ockam Orchestrator and then discover the route to our outlet, the Snowflake app needs to allow outbound connections to your Ockam project.

Toggle the Grant access to egress and reach your Project option and approve the connection by pressing Connect.

Toggle the Grant access to your PostgreSQL database option, enter the username and password for your PostgreSQL database, and store them as a secret in Snowflake.

Now that you've created the stored procedure, it's time to run it. Copy the code below and run it in a Snowflake Worksheet, replacing database.schema.stream with the correct value for your stream:

CALL grant_stream_permissions('database.schema.stream');

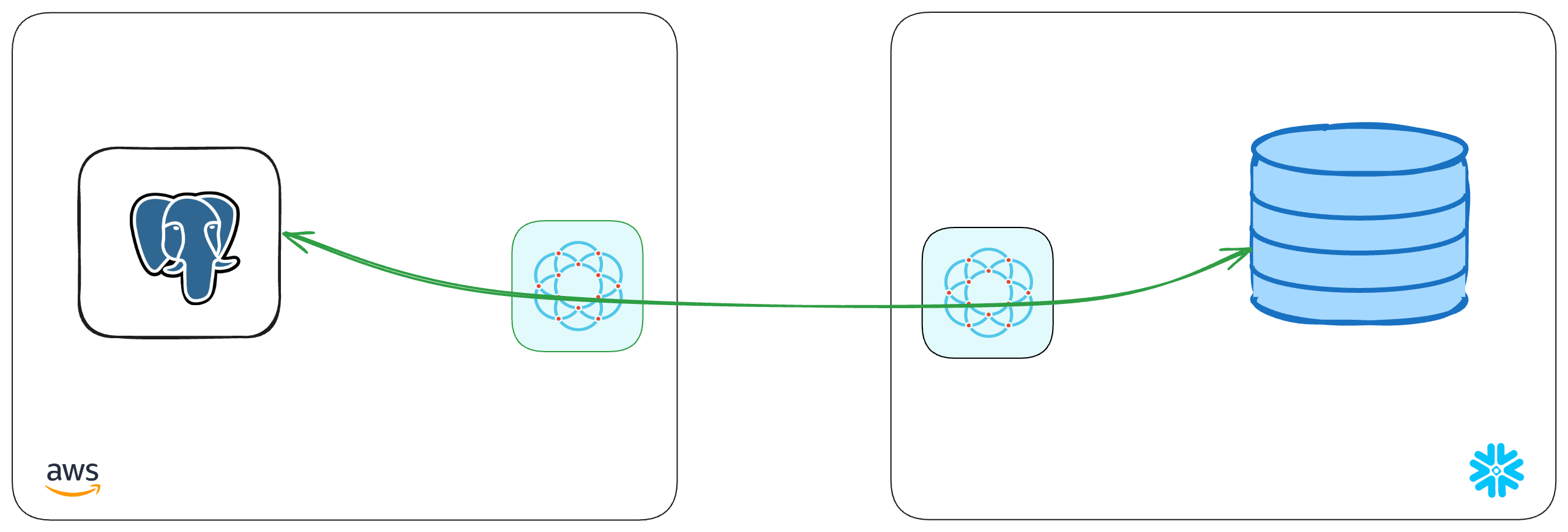

With that, we've completed the last step in the setup. We've now got a complete point-to-point connection that allows our Snowflake warehouse to securely push data through to our private PostgreSQL database.

Next steps

Any update to the data in your Snowflake table will now create a new record in your Snowflake stream, which is then sent over your Ockam portal to your table in PostgreSQL. To see it in action, insert a row into your Snowflake table, then use your usual SQL tooling to see the record arrive in your PostgreSQL table.
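
A quick end-to-end check could look like this sketch. It assumes a hypothetical `customers` table with `id`, `name`, and `email` columns that exists on both sides and is mapped in the connector. The first statement runs in Snowflake and the second in PostgreSQL.

```sql
-- In Snowflake: insert a row into the (hypothetical) source table.
INSERT INTO cdc_demo.public.customers (id, name, email)
VALUES (1, 'Ada Lovelace', 'ada@example.com');

-- In PostgreSQL: after a short delay, look for the replicated row.
SELECT id, name, email FROM customers WHERE id = 1;
```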

From here you can take advantage of the existing PostgreSQL infrastructure and ecosystem. Write consumers that update data as required in your CRM and marketing systems, or use it as the foundation for a highly scalable reverse ETL process. The possibilities are limitless!

If you'd like to explore some other capabilities of Ockam I'd recommend:

- Creating private point-to-point connections with any database

- Streaming real-time changes from Snowflake to Kafka

- Adding security as a feature in your SaaS product
