Secure & private connections to enterprise LLMs

Glenn Gillen

VP of Product, GTM

“Every company's business data is their gold mine and there's every company sitting on these gold mines”

— Jensen Huang, CEO & Founder @ NVIDIA

Building an enterprise AI system means enhancing a Large Language Model (LLM) by augmenting its knowledge with access to additional data or tools. LLMs have already been trained on vast amounts of publicly available internet data, so in an enterprise AI context the most valuable thing you can do is connect an LLM to the unique business data you've accumulated over many years. That is also the biggest challenge in building enterprise AI systems. You have legitimate reasons for distributing your data and tools across various locations and networks, and it's unlikely that you have the GPUs and compute required to run LLMs in all of those locations. Data is spread everywhere, LLMs and compute are somewhere else, and nothing is where you need it to be!

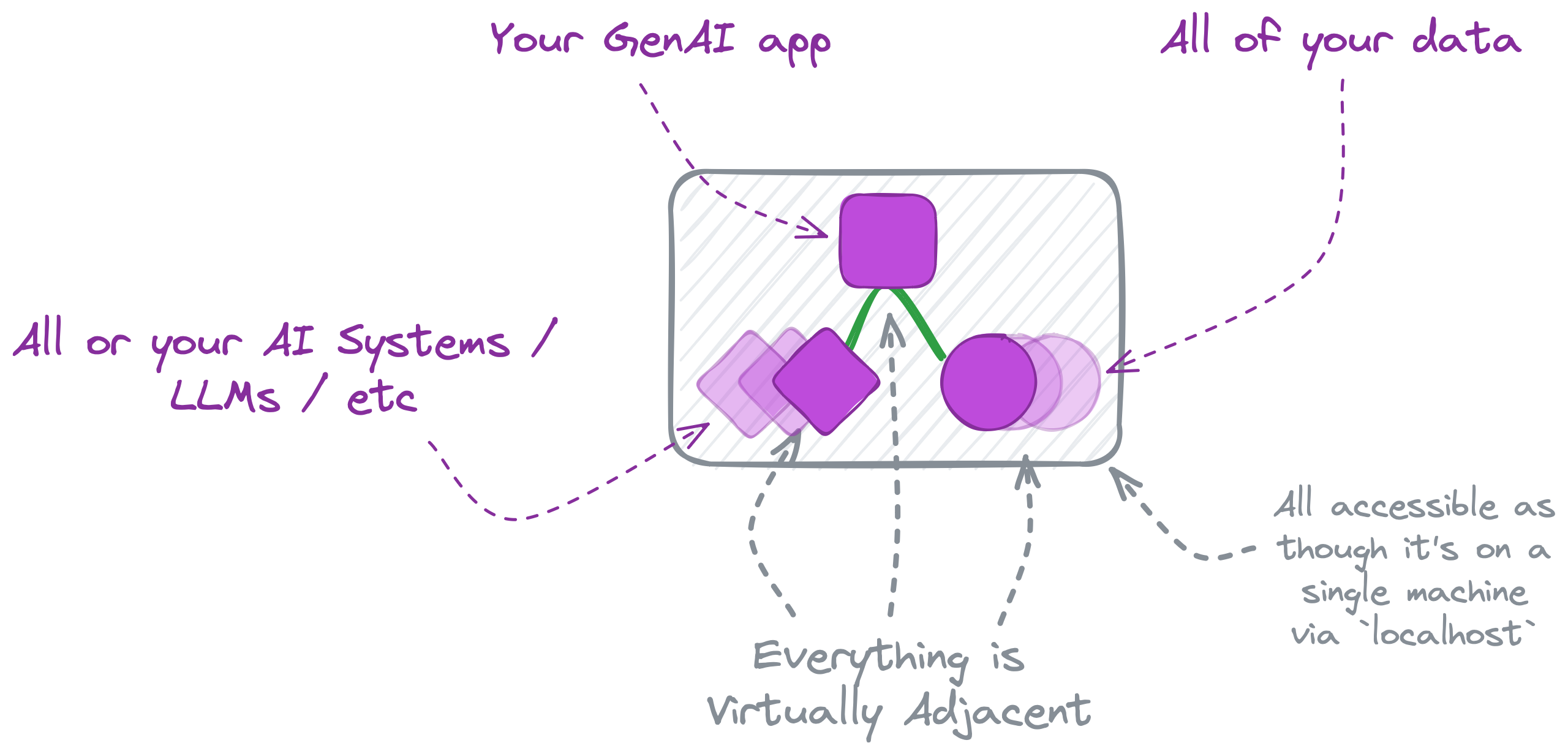

Ockam solves this problem through the use of "virtual adjacency":

Without the need to replatform, rearchitect your infrastructure, or even consolidate or migrate your data, Ockam makes all these dependent systems available to each other as though they were all virtually on the same machine. Your enterprise AI agents can establish secure and private point-to-point connections with any data or system they need to access, and you can rapidly build AI systems without the need to change your network or expose any of your data to the public internet.

In this post I'm going to walk you through how to set up an Ockam Portal, and show how Ockam's approach to virtual adjacency can make your AI systems privately available wherever your data is.

Data analytics & insight from LLMs

Before we get into how to set this up, I'm going to jump straight to the end and give you an example of what's possible, in less than 10 minutes. We're going to connect a private AI model running in the cloud with some private data in an "on-prem" (my laptop!) environment.

Remember that for all this I am not using any public APIs or making any of my systems or data accessible from the public internet.

Adding AI to the process took minutes to set up, and it now focuses my attention on validating the alternatives that have the highest probability of being "interesting". It reduces the time it takes me to get started by orders of magnitude, and it directs my effort to the most valuable places to start.

Just imagine the amazing things you can do once I show you how quick it is to connect these things together!

Bringing LLMs, AI Agents, and data closer to each other

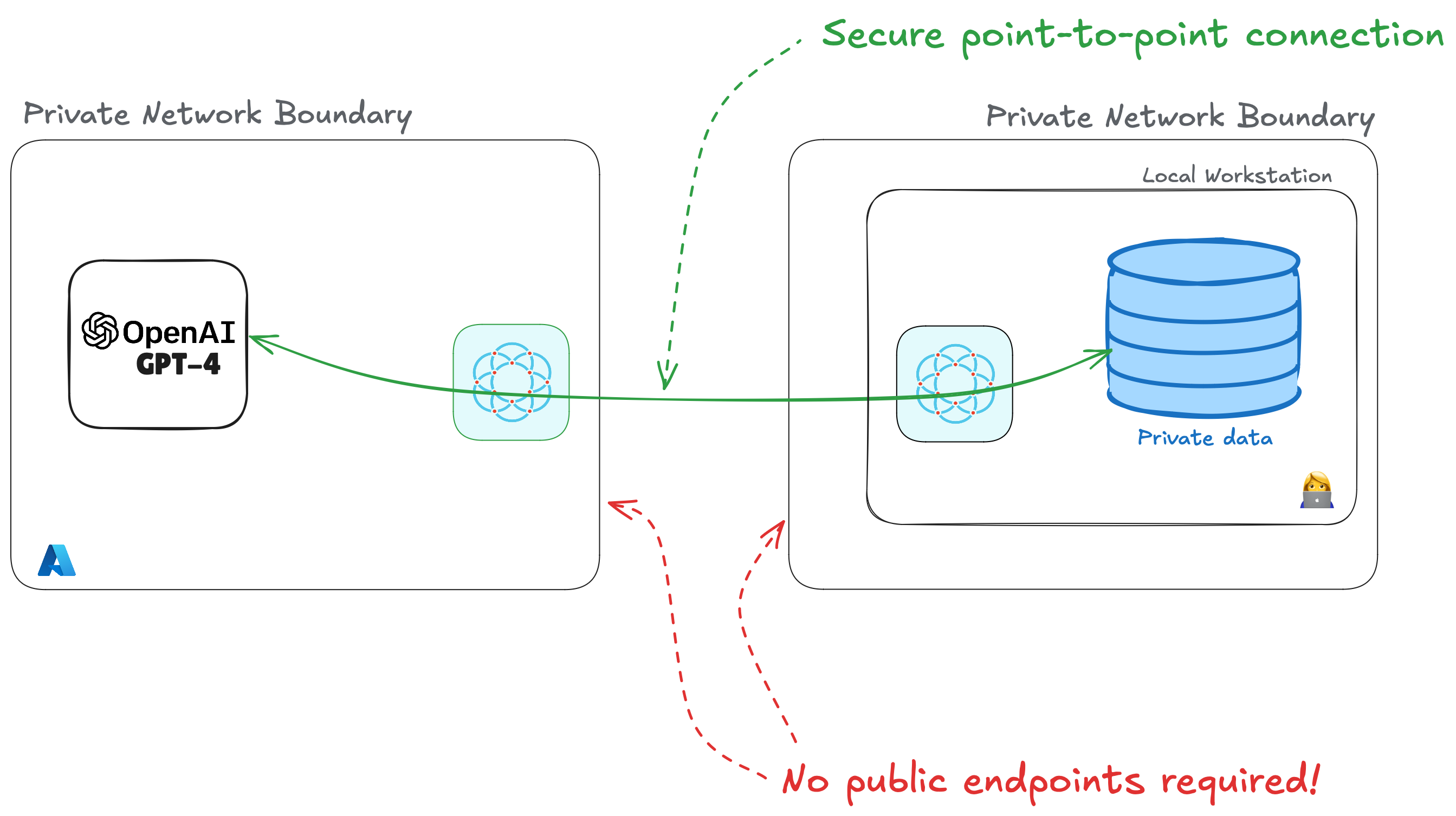

I achieved all this in less than 10 minutes on my laptop connected to my private home network, communicating with a private OpenAI service in a private Azure network, over an Ockam Portal.

An Ockam Portal does something that feels like magic: it virtually moves your remote application through the Portal so that it's available on localhost, something we refer to as "virtual adjacency". I didn't need to change my network layer configurations. I didn't need to move a database into the cloud. I didn't need to have a bunch of GPUs or spare compute available locally to run a model.
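To make "available on localhost" a little more concrete, here is a minimal Python sketch of the plumbing an application sees: a local listener whose bytes are relayed to a remote address. This is not how Ockam is implemented. A real Ockam Portal carries this traffic over a mutually authenticated, end-to-end encrypted channel between two nodes, while this toy relay does no authentication or encryption at all. The function names are hypothetical.

```python
import asyncio


async def _pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then close the writer."""
    try:
        while True:
            data = await reader.read(65536)
            if not data:
                break
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass  # the other side went away; just stop relaying
    finally:
        writer.close()


async def start_local_forwarder(local_port: int, remote_host: str,
                                remote_port: int) -> asyncio.AbstractServer:
    """Listen on 127.0.0.1:local_port and relay each connection to remote_host:remote_port.

    Hypothetical illustration only: unlike an Ockam Portal, there is no
    authentication and no encryption. Pass local_port=0 to pick a free port.
    """

    async def handle(client_reader: asyncio.StreamReader,
                     client_writer: asyncio.StreamWriter) -> None:
        remote_reader, remote_writer = await asyncio.open_connection(remote_host, remote_port)
        # Relay in both directions concurrently until either side closes.
        await asyncio.gather(
            _pipe(client_reader, remote_writer),
            _pipe(remote_reader, client_writer),
        )

    return await asyncio.start_server(handle, "127.0.0.1", local_port)
```

A client connecting to the forwarder's local port talks to the remote service without knowing where it lives, which is the property the Portal provides, minus the security that makes it safe to use across networks.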

It does this by having both ends of the portal establish a mutually authenticated point-to-point connection with each other. The Ockam Protocol takes care of automatically generating and exchanging keys between those nodes, and then automatically and regularly rotating those keys. The end result is a secure and private connection that is end-to-end encrypted with none of the nodes directly accessible from the public internet.
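The Ockam Protocol handles key generation, exchange, and rotation for you, so there is nothing you need to write here. Purely to illustrate what "regularly rotating keys" can mean, the sketch below derives a fresh symmetric key from the current one with HKDF (RFC 5869), built from Python's standard `hmac` and `hashlib` modules. This is a generic rekeying pattern, not Ockam's actual key schedule; the `info` label and function names are hypothetical, and production code should rely on a vetted cryptography library.

```python
import hashlib
import hmac

_HASH_LEN = hashlib.sha256().digest_size  # 32 bytes


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869) with HMAC-SHA256; an empty salt means HashLen zero bytes."""
    if not salt:
        salt = b"\x00" * _HASH_LEN
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF-Expand (RFC 5869): T(i) = HMAC(PRK, T(i-1) | info | i)."""
    if length > 255 * _HASH_LEN:
        raise ValueError("requested length too large for HKDF-SHA256")
    output, block, counter = b"", b"", 1
    while len(output) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        output += block
        counter += 1
    return output[:length]


def rotate_key(current_key: bytes, epoch: int) -> bytes:
    """Derive the next epoch's key from the current one (hypothetical scheme).

    Both peers run the same derivation, so they agree on the new key without
    sending it over the wire. HKDF is one-way, so a leaked new key does not
    reveal the old one, and the old key can be discarded.
    """
    prk = hkdf_extract(salt=b"", ikm=current_key)
    return hkdf_expand(prk, info=b"example-rekey" + epoch.to_bytes(8, "big"))
```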

Once the portal is set up, my Python code is able to talk to the Azure OpenAI service as though it were running directly alongside my code on localhost.
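As a rough sketch of what that looks like from client code, the snippet below builds (but does not send) a chat completions request to the Azure OpenAI REST API using only the standard library, aimed at a portal listening on `https://localhost:443`. The deployment name, API version, and key in the usage line are placeholders, and how TLS hostname verification is handled in this setup is an assumption not covered here.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the local end of the Ockam Portal listens here.
PORTAL_BASE_URL = "https://localhost:443"


def build_chat_request(deployment: str, api_version: str, api_key: str, prompt: str,
                       base_url: str = PORTAL_BASE_URL) -> urllib.request.Request:
    """Build an Azure OpenAI chat completions request aimed at the local portal endpoint."""
    path = f"/openai/deployments/{urllib.parse.quote(deployment)}/chat/completions"
    query = urllib.parse.urlencode({"api-version": api_version})
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}{path}?{query}",
        data=body,
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )
```

For example, `build_chat_request("my-gpt4-deployment", "2024-02-01", "<key>", "Summarise this CSV")` produces a request you could pass to `urllib.request.urlopen`. The only portal-specific detail is the base URL; the rest is an ordinary Azure OpenAI call.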

This isn't limited to Python scripts pushing CSV files to Azure OpenAI from a laptop. Anything that needs to connect data to an AI service can bring those systems virtually together in a secure way. Any agentic system, workflow, batch or ETL process, RAG system, or even product integration can connect with whatever data you need, wherever it's located: from on-prem systems like Oracle and SAP with decades of data in them, to BigQuery tables that just happen to be in a different cloud from where you need them.

Interacting with LLMs locally

I've packaged a demonstration of this into a couple of scripts and a Docker configuration so you can try it yourself with minimal effort. The full implementation is available on GitHub, and I'll walk through the relevant parts in detail to explain how it all works. The repo includes:

- A set of scripts that will:

- Install the latest version of Ockam Command on your workstation, and enroll your machine as an administrator in your Ockam Project.

- Generate enrollment tickets for the Ockam Nodes that will create each end of our Ockam Portal.

- Create a new isolated resource group, VNet, Azure OpenAI service and all other dependencies within Azure required to complete the demo.

- Run an Ockam Node within Azure that mutually authenticated clients can later use to securely and privately access the Azure OpenAI service.

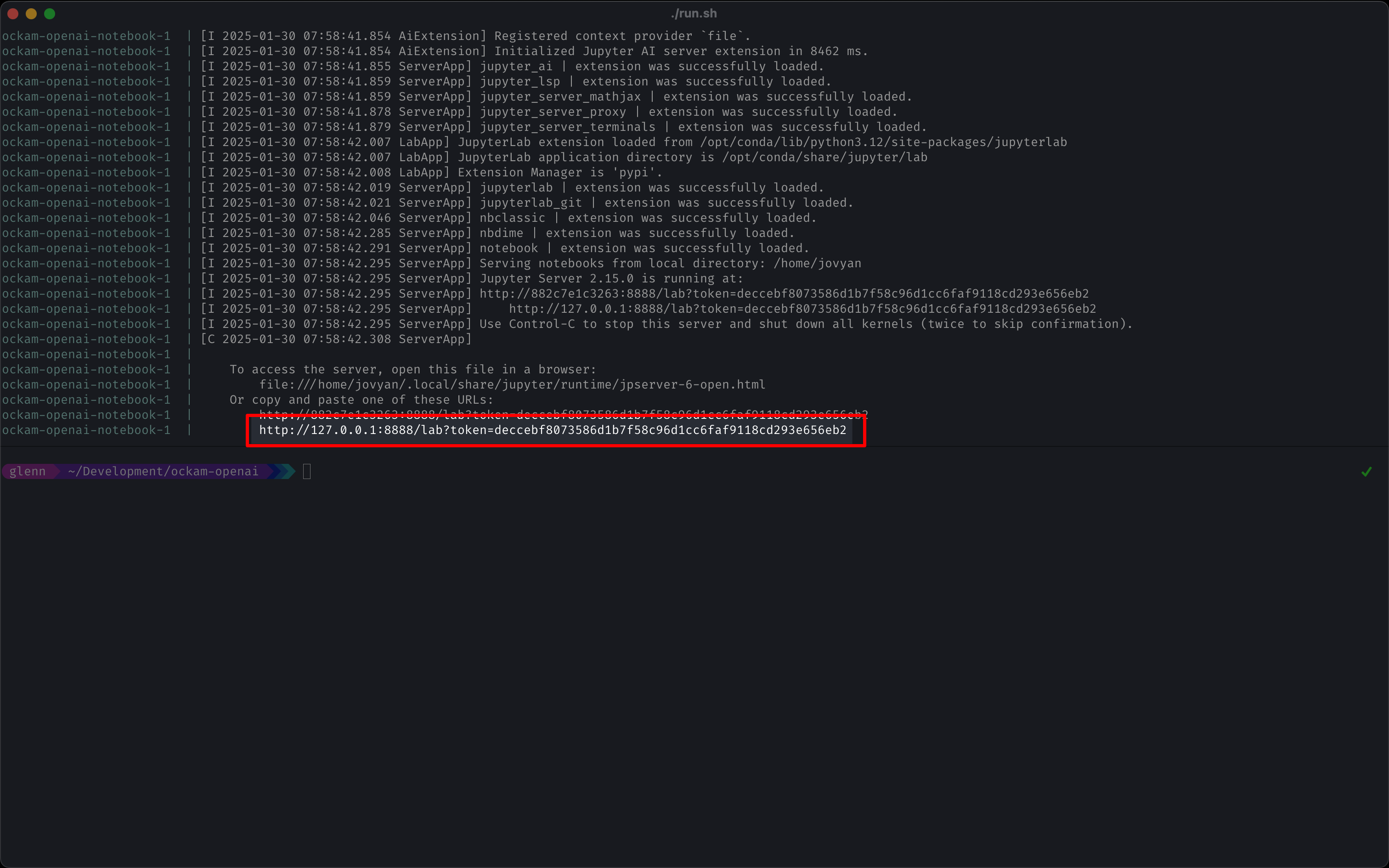

- Start an interactive playground on

https://127.0.0.1:8888

- A Docker Compose configuration file that will start a container that:

- Runs an instance of Jupyter Lab to serve as our interactive playground to load data and communicate with the Azure OpenAI service.

- Includes a notebook with all the commands and prompts I used to interact with the LLM.

- Automatically start an Ockam Node that's pre-configured to create an Ockam Portal to the Azure OpenAI service.

Prerequisites

Before running the script below, ensure you have an active Ockam account. If you don't already have one, you can sign up now to start a 14-day trial.

To automatically provision everything, clone the Ockam GitHub repository and run the following from within the /examples/command/portals/ai/azure_openai_jupyter directory:

./run.sh

This will provision the required resources within Azure and then start the Docker container running Ockam and Jupyter Lab. The log output will include a signed URL that automatically logs you in when you visit it in your browser. The URL will look like the one in this screenshot:

Once logged in, click Notebooks > azure-openai.ipynb in the file explorer on the left to view the notebook with all of the commands required to communicate with the Azure OpenAI service. You can run all of the commands and see the output live by selecting Run > Run All Cells.
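If you'd rather not scroll through the container logs for the signed login URL, a small helper can pull it out. This sketch assumes the link follows Jupyter's usual token pattern on port 8888 (for example `http://127.0.0.1:8888/lab?token=...`); the exact format the demo prints may differ, so the pattern accepts both http and https. The function name is hypothetical.

```python
import re
from typing import Optional

# Assumed format: a Jupyter-style URL on 127.0.0.1:8888 carrying a token query parameter.
_LOGIN_URL = re.compile(r"https?://127\.0\.0\.1:8888/\S*\?token=[0-9A-Za-z]+")


def find_login_url(log_text: str) -> Optional[str]:
    """Return the first token-bearing Jupyter URL found in the log text, or None."""
    match = _LOGIN_URL.search(log_text)
    return match.group(0) if match else None
```

Feed it the text of the container logs and open whatever it returns in your browser.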

You've just securely connected your private workstation to a private Azure OpenAI endpoint, loaded in some private data, and started the interaction loop between AI and your data, all in only a few minutes! Feel free to keep playing with what you've set up: load in other data sources and experiment with what's possible.

Once you're finished with the demo you can remove all the created resources by running:

./run.sh cleanup

Let's dig into what this script has done so you can understand how to replicate something similar for your own needs.

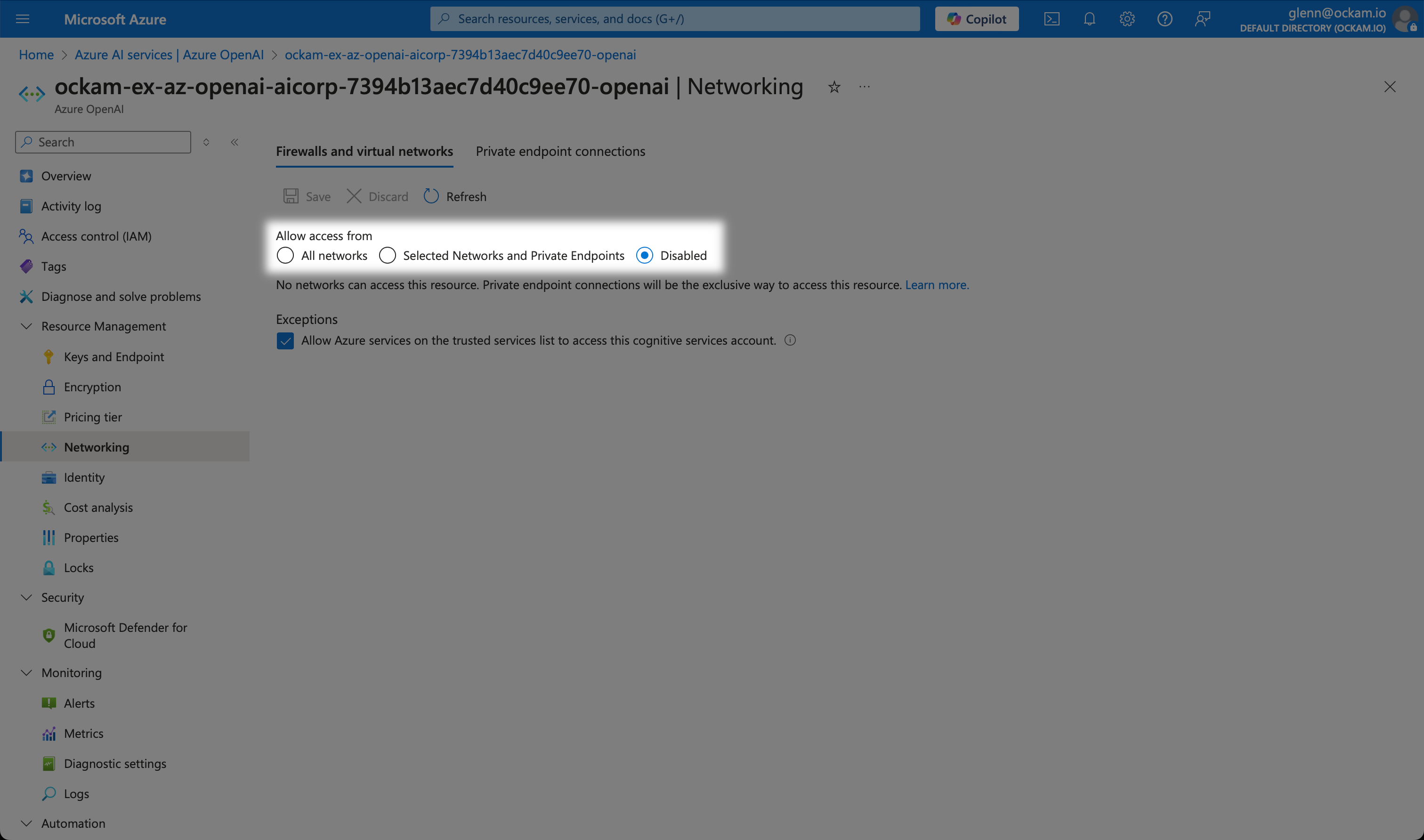

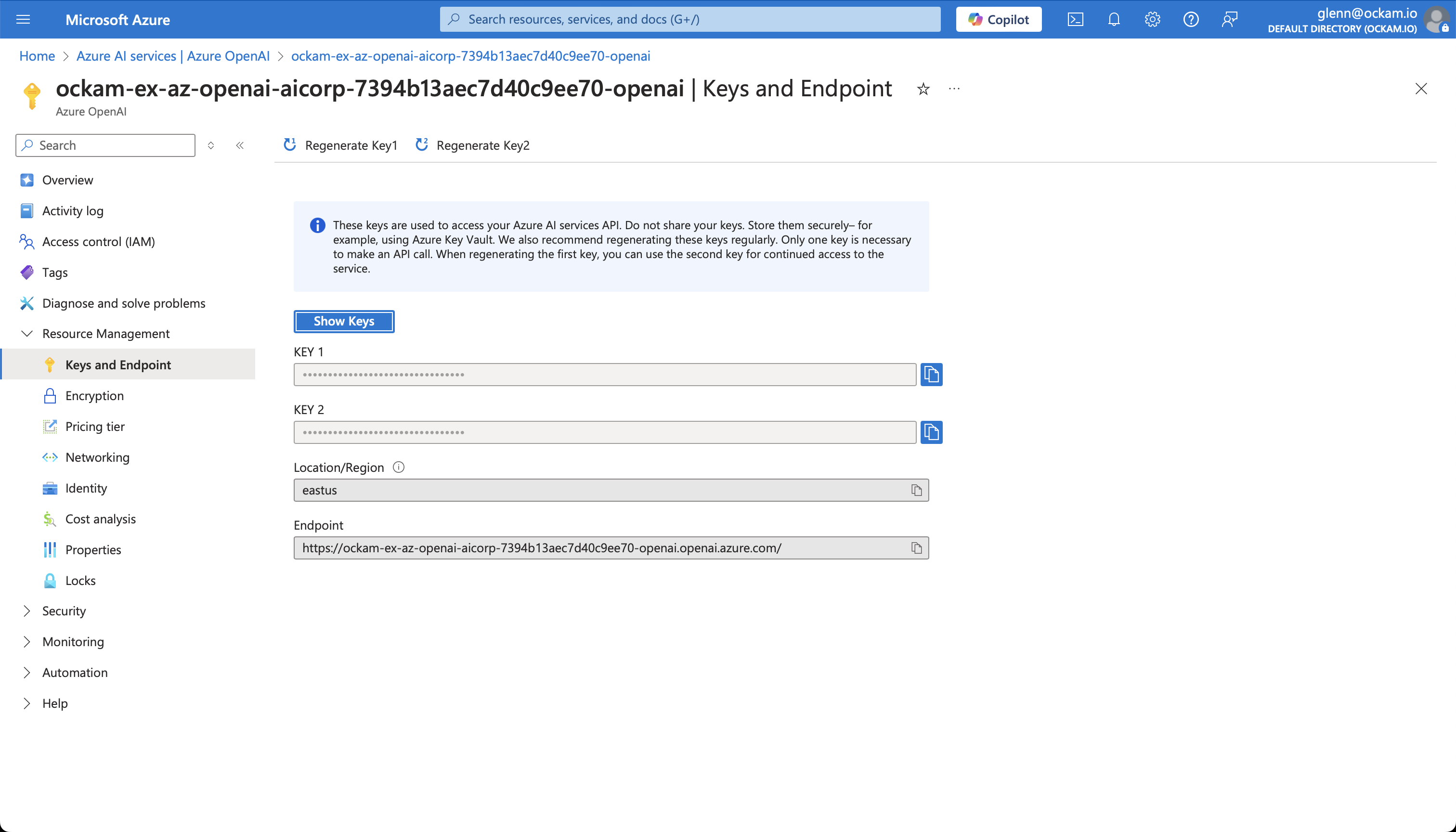

Set up a private Azure OpenAI GPT-4 endpoint

The run.sh script will provision a new Azure OpenAI service in your Azure account, and then isolate it from both the internet and any other services by disabling all network access.

It then creates a private endpoint that we can use to securely access the OpenAI service over Azure Private Link.
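To double-check the isolation, you can inspect the account's properties. The sketch below assumes you have the account's JSON description (for example the output of `az cognitiveservices account show`, parsed with `json.loads`) in the ARM-style layout with settings under `properties`. The property names `publicNetworkAccess`, `networkAcls.defaultAction`, and `privateEndpointConnections` are based on that resource schema and should be verified against your own output; the helper name is hypothetical.

```python
from typing import Any, Dict, List


def isolation_problems(account: Dict[str, Any]) -> List[str]:
    """Return reasons the account still looks publicly reachable (empty list if none).

    Assumes an ARM-style resource description with settings under "properties".
    """
    props = account.get("properties", {})
    problems = []
    if props.get("publicNetworkAccess") != "Disabled":
        problems.append("publicNetworkAccess is not 'Disabled'")
    acls = props.get("networkAcls") or {}
    # Only check the ACL default action if network ACLs are present at all.
    if acls and acls.get("defaultAction") != "Deny":
        problems.append("networkAcls.defaultAction is not 'Deny'")
    if not props.get("privateEndpointConnections"):
        problems.append("no private endpoint connections found")
    return problems
```

An empty result means public access is off and a private endpoint exists, which matches the setup described above.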

We're using the Azure OpenAI service here because of the level of isolation and privacy it offers: our data isn't available to other customers, isn't shared with OpenAI, and isn't used to improve models.

Set up Ockam

Start an Ockam node next to Azure OpenAI

Start an Ockam node next to your data

Now that the two nodes are running, the Azure OpenAI service is available on localhost:443 within the Docker container running on our local workstation. All the systems are still private, and we get the benefit of Azure running the models on infrastructure that is best suited to LLMs, yet everything looks like it's running locally. Explaining how all the magic works takes a while, but establishing an Ockam Portal can take only a few milliseconds.

These connections are so quick and easy to set up!
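A simple way to confirm the local end of the portal is up is to wait until something accepts connections on localhost:443 inside the container. The helper below is a generic standard-library sketch with hypothetical name and defaults. It only proves a TCP listener is present, not that the secure channel behind it is healthy, and the time it returns is how long you waited, not the portal's setup time.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.2) -> float:
    """Poll until host:port accepts a TCP connection; return the seconds waited.

    Raises TimeoutError if nothing is listening before the timeout expires.
    """
    start = time.monotonic()
    deadline = start + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return time.monotonic() - start
        except OSError:
            if time.monotonic() >= deadline:
                raise TimeoutError(f"{host}:{port} not reachable within {timeout}s")
            time.sleep(interval)
```

For example, `print(f"portal ready after {wait_for_port('localhost', 443):.2f}s")` is a reasonable first cell before sending any prompts.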

Next steps

If you've not been following along by running the demo, then this is a great time to sign up for Ockam and give it a try. If you'd like to explore some other capabilities of Ockam, I'd recommend:
